Choosing the right AI model starts with narrowing down the use case and weighing your deployment needs and data security policies. Several other factors and specifications also influence the decision. Understanding them helps you make the optimal choice: a model that is more powerful than the task requires is overkill and costs more to run, while an underpowered model yields sub-optimal results.

This article discusses a few key factors to consider while choosing an AI model for your project. Let’s also explore how these factors apply to some of the most commonly used models available in the market.

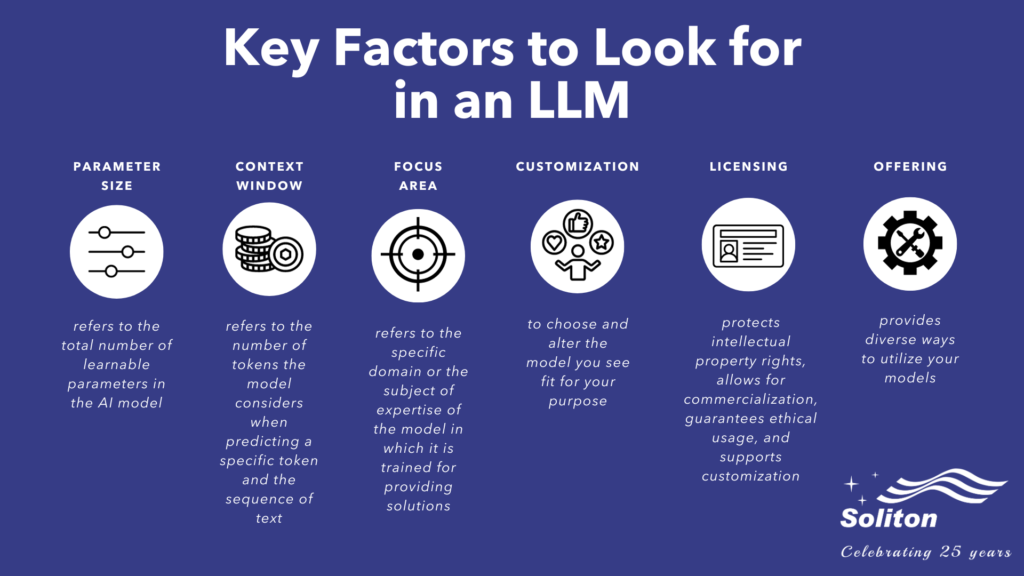

Some Key Factors to Look for in an LLM

Key factors to look for in an LLM

Each AI model has its own specifications and behavior, shaped by its intended use case, pricing, and training. Here are a few essential factors to keep an eye on when choosing one:

Parameter Size

The parameter size refers to the total number of learnable parameters in the AI model. These parameters are the variables that the model uses to make predictions and generate text based on the input it receives. When a model is described as "large" or "small", the label is predominantly based on its parameter size. A larger parameter size gives the model more capacity to learn and makes it more expressive, but it also requires far more compute and memory.

Note that a larger model is not necessarily a smarter one, and size alone does not mean it will work for your needs. How capable and useful a model is depends on the data it was trained on and on how well it fits your specific use case.
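To get a feel for how parameter size translates into hardware needs, here is a minimal back-of-the-envelope sketch. It assumes that the memory needed to hold the weights is roughly the parameter count multiplied by the bytes per parameter, and it ignores activations, caches, and framework overhead, so real requirements will be higher. The function name and the precision table are illustrative, not part of any library.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Assumption: ignores activations, caches, and framework overhead.

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Approximate memory (in GB, where 1 GB = 1e9 bytes) needed to hold the weights."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # Parameter counts matching common "7B / 13B / 70B" model sizes.
    for name, params in [("7B", 7e9), ("13B", 13e9), ("70B", 70e9)]:
        print(f"{name}: ~{weight_memory_gb(params):.0f} GB in fp16")
```

Under these assumptions, a 7-billion-parameter model needs about 14 GB just for its weights in 16-bit precision, while a 70-billion-parameter model needs about 140 GB, which is why larger models usually call for multiple GPUs or reduced precision.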

Context Length / Context Window

The context length is the maximum number of tokens, covering both the prompt and the generated text, that the model can take into account when predicting the next token. Each model has a limit on the number of tokens it supports. A larger context window lets the user ask detailed questions with examples and supporting details, which in turn prompts more specific and detailed answers.

Consider an example where the user wants to run sentiment analysis on a list of product feedback. Both the feedback list supplied as input and the generated sentiment analysis output must fit within the supported context length. In some cases, the prompt must also include examples and descriptions that tell the model how to perform the analysis. This increases the token count and quickly fills the available context window, which can lead to errors or inaccurate results.

Choose the context window with your use case, the expected input size, and the expected output size in mind so that the model can deliver optimal results.
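The sentiment analysis scenario can be sketched as a simple token budget. The snippet below is a minimal illustration that assumes roughly four characters per token for English text, which is only a heuristic; a real project should count tokens with the tokenizer of the chosen model. The helper names `estimate_tokens` and `batch_feedback`, and the per-item output allowance, are hypothetical.

```python
from typing import List

CHARS_PER_TOKEN = 4  # rough heuristic for English text; real tokenizers differ


def estimate_tokens(text: str) -> int:
    """Approximate token count from character length (an assumption, not a tokenizer)."""
    return max(1, -(-len(text) // CHARS_PER_TOKEN))  # ceiling division


def batch_feedback(
    instructions: str,
    feedback: List[str],
    context_window: int,
    output_tokens_per_item: int = 10,
) -> List[List[str]]:
    """Group feedback items so each request (prompt plus expected output) fits the window."""
    base = estimate_tokens(instructions)  # instructions are repeated in every request
    batches: List[List[str]] = []
    current: List[str] = []
    used = base
    for item in feedback:
        cost = estimate_tokens(item) + output_tokens_per_item
        if base + cost > context_window:
            raise ValueError("A single feedback item does not fit in the context window")
        if current and used + cost > context_window:
            # Start a new request once the current one is full.
            batches.append(current)
            current, used = [], base
        current.append(item)
        used += cost
    if current:
        batches.append(current)
    return batches
```

Calling `batch_feedback(prompt, reviews, context_window=4096)` returns groups of reviews that can each be sent in a separate request. The longer the instructions and examples in the prompt, the fewer reviews fit into each batch.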

Focus Area

The focus area refers to the specific domain or subject of expertise for which the model has been trained.

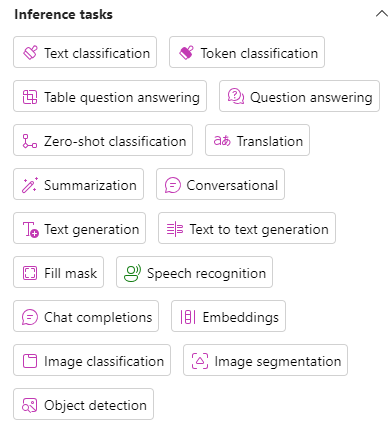

ChatGPT, a widely known LLM-based assistant, handles diverse tasks well, including programming, content generation, and translation. Other models are great at summarization but might fail at generating even the simplest code. Platforms like Microsoft Azure can help you filter models by the focus area that best suits your needs.

Below is an illustration of possible tasks to help shortlist a suitable model:

Choosing the focus area of the AI model depending on your use case

A model's ability to answer a wide range of queries within a specific focus area depends on the data on which it was trained. Therefore, review your requirements and expectations first, and then pick a model based on its focus area.

Customization

Another factor to consider is whether the model can be customized, i.e., whether it can be fine-tuned for specific user needs. In some cases, the chosen model might not provide the expected response out of the box. It then makes sense to pick a model that is close to your needs and check whether it can be customized further to reach the expected performance.

The availability of training data and the base model used for training are important factors in determining how a model's capability and performance can be improved.

Licensing

Licensing is pivotal when picking a model because the license protects intellectual property rights and determines whether you may commercialize the model, how you are expected to use it, and whether you may customize it.

If you plan to deploy an AI model in a business or commercial setting, choosing a model whose licensing terms align with your use case is essential. A clear picture of the license keeps you from picking a model intended primarily for research when you want to deploy it commercially.

Licensing is also a useful early filter: it can rule out several models at once, which narrows the choice.
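Using licensing as a filter can be sketched in a few lines. The example below is purely illustrative: the candidate names and their license flags are hypothetical placeholders, not statements about any real model, and the actual terms must always be read from the license text itself.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Candidate:
    name: str                  # hypothetical model name
    commercial_use: bool       # does the license allow commercial deployment?
    allows_fine_tuning: bool   # does the license allow fine-tuned derivatives?


def shortlist(
    candidates: List[Candidate],
    need_commercial: bool,
    need_fine_tuning: bool,
) -> List[str]:
    """Keep only the candidates whose license terms cover the stated needs."""
    return [
        c.name
        for c in candidates
        if (c.commercial_use or not need_commercial)
        and (c.allows_fine_tuning or not need_fine_tuning)
    ]


# Hypothetical catalog used only to demonstrate the filter.
CANDIDATES = [
    Candidate("model-a", commercial_use=True, allows_fine_tuning=True),
    Candidate("model-b", commercial_use=False, allows_fine_tuning=True),  # research-only
    Candidate("model-c", commercial_use=True, allows_fine_tuning=False),
]

if __name__ == "__main__":
    print(shortlist(CANDIDATES, need_commercial=True, need_fine_tuning=True))
```

For a commercial project that also needs fine-tuning, only `model-a` survives the filter in this made-up catalog, so the remaining factors need to be compared for a much shorter list.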

Offering

AI models differ not only in licensing terms, context window sizes, and parameter sizes but also in how they are made available for use.

Certain vendors offer fully downloadable models that you can install and run on your own system. Service-based providers, on the other hand, offer access to LLMs without revealing the model's inner workings or training specifics; users send questions or requests and pay on a pay-as-you-go basis. For instance, GPT 3.5 and GPT 4 operate as a service, whereas Llama 2 can be downloaded and run on your own system. This gives you the flexibility to choose the offering that suits your preferences.

In some cases, using a pay-as-you-go service costs less than buying and running a model on a self-hosted GPU, and it reduces the overhead of getting up and running during the model evaluation or prototyping stages.
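The trade-off between pay-as-you-go and self-hosting comes down to simple arithmetic. The sketch below assumes a flat price per 1,000 tokens for the service and a flat hourly rate for an always-on GPU; every price used in the example is a hypothetical placeholder, so substitute your vendor's actual rates.

```python
def monthly_api_cost(tokens_per_month: float, price_per_1k_tokens: float) -> float:
    """Cost of a pay-as-you-go service billed per 1,000 tokens."""
    return tokens_per_month / 1000 * price_per_1k_tokens


def monthly_self_hosted_cost(gpu_hourly_rate: float, hours_per_month: float = 730) -> float:
    """Cost of keeping a GPU running for the given number of hours (730 is about one month)."""
    return gpu_hourly_rate * hours_per_month


def break_even_tokens(
    price_per_1k_tokens: float,
    gpu_hourly_rate: float,
    hours_per_month: float = 730,
) -> float:
    """Monthly token volume at which both options cost the same."""
    hosted = monthly_self_hosted_cost(gpu_hourly_rate, hours_per_month)
    return hosted / price_per_1k_tokens * 1000


if __name__ == "__main__":
    # Hypothetical prices: 0.002 per 1K tokens, 2.00 per GPU hour.
    volume = break_even_tokens(price_per_1k_tokens=0.002, gpu_hourly_rate=2.0)
    print(f"Break-even at about {volume:,.0f} tokens per month")
```

With these placeholder prices, the service stays cheaper until usage reaches roughly 730 million tokens per month, which illustrates why pay-as-you-go tends to suit evaluation and prototyping workloads.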

Delving Deep into the Key Factors of Some Popular LLMs

From Llama to the GPT family, organizations such as Meta, Google, and OpenAI offer a range of AI models. Here is a table that summarizes the key factors and specifications of some popular models:

| Model Name | Parameter Size | Context Window | Focus Area | Customization | License | Offering |
| --- | --- | --- | --- | --- | --- | --- |
| GPT 3.5 | 175 billion | 4K, 16K | NLP, Language Translation, Text Completion, Code Generation, etc. | Model Index for Researchers | OpenAI License Agreement | As a Service |
| GPT 4 | Approximately 1 trillion (unofficial estimate) | 8K, 32K | Same as GPT 3.5 but with better performance | | OpenAI License Agreement | As a Service |
| Llama 2 | 7B, 13B, 70B | 4K | Claimed to be comparable to GPT 3.5 | Fine-tuned for chat purposes | Llama 2 Community License | Downloadable |
| T5 | T5-small, T5-base, T5-large, T5-3b, T5-11b (depending on the parameter size) | | Machine Translation, Document Summarization | Pre-trained and fine-tuned variations such as Flan-T5 | Apache License 2.0 | Downloadable |
| Bloom | 176B | | | | BigScience RAIL License | Downloadable |

Table depicting the key specifications of popular AI models

Let us compare the specifications and features of two mainstream model families in greater detail below:

GPT 3.5 and GPT 4

OpenAI's GPT 3.5 and GPT 4 are among the most prominent models in the AI landscape.

GPT 3.5, with its 175 billion parameters, played a pivotal role in bringing large language models (LLMs) to a general audience. GPT 4, an upgrade of GPT 3.5, offers improved performance and accuracy with broader learning and reasoning capabilities, and it is optimized for chat-based interactions.

Both models operate under OpenAI's licensing agreement and are offered on a pay-as-you-use basis. GPT 3.5 comes with context windows of 4K and 16K tokens, while GPT 4 supports 8K and 32K tokens. Owing to their substantial parameter sizes and range of versions, GPT models perform well across various domains and offer customization options, making them adaptable for diverse applications.

Llama 2

Llama 2 appeared as a game-changer in the generative AI space. It is released under Meta's Llama 2 Community License, which permits commercial use subject to certain conditions, unlike its predecessor, Llama 1, which was licensed exclusively for research purposes.

Llama 2 comes in variants with 7, 13, and 70 billion parameters. With a 4K-token context window, it is positioned as an alternative to OpenAI's GPT models.

However, it is a downloadable model that requires robust computing infrastructure for optimal performance, making it a versatile yet resource-intensive choice for businesses.

Choosing the Right Model with the Right Specification Matters

When you explore AI models and gather information to select one, the factors discussed above can be decisive for your project's success. Analyzing how each model handles these factors also gives you a clearer picture of your own requirements.

Getting started with AI is just the beginning. These models keep improving over time with enhanced features and specifications. However, the true success of a project comes from choosing a suitable model whose features align best with your goals and objectives.

Interested in learning more about the nuances of implementing AI in your project? Dive into our blog section to explore more and stay ahead of the curve.