In today’s rapidly evolving technology landscape, the scope offered by Artificial Intelligence is limitless. Its ability to solve complex real-world problems with ease and efficiency is often a game-changer.

While we marvel at the output of generative AI, such as large language models (LLMs) that provide contextual answers to user queries within their scope of knowledge, we often fail to see the “Life Cycle” behind the development of such an AI-enabled solution.

As people start to apply AI in their projects and look for better solutions to problems, they might be confronted with questions like “What does a typical AI project look like?” or “What does it take to streamline project development and achieve vast improvements through effective integration of AI?”. Under such circumstances, understanding the project life cycle can be critical to developing a highly effective AI-powered application.

In this blog, let’s explore the ideal project life cycle for developing an AI-powered application, from defining the scope to deploying the application.

The Need for Project Life Cycle of Applied AI

Irrespective of the domain and use case, the Applied AI life cycle concerns more than just the AI / Data Science engineers or software developers involved in the project. It is essential for everyone involved in the project, including leads and managers, to familiarize themselves with this process.

Additionally, understanding the life cycle helps project managers and leads stay in control of AI application development projects and execute them in a way that is fully aligned with end-user requirements.

Stages in the Project Life Cycle of Applied AI

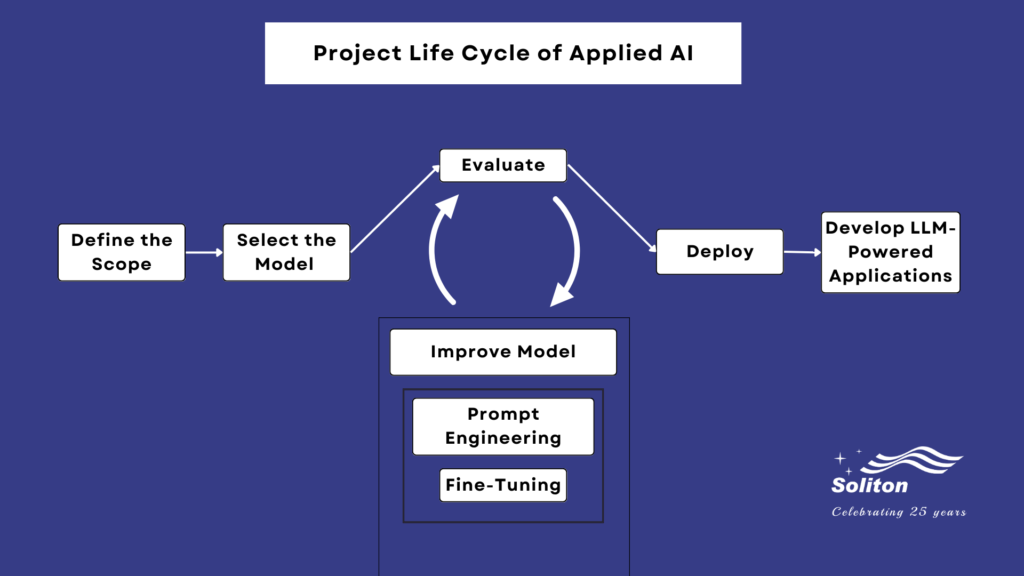

The applied AI lifecycle is a roadmap that guides the AI model’s conception, development, deployment, and maintenance. The project life cycle involves a series of stages, from identifying the scope and choosing the suitable model to evaluating and integrating it with the application.

The project life cycle of an AI-powered application cannot begin the way a traditional project does, with a focus on developing functionality through programmed logic. It is important to acknowledge the need for a continuous evaluation process that involves trial and error and extensive fine-tuning before a suitable model can be chosen or developed and made ready for deployment. The final stage is to deploy the model and then develop an LLM-powered application.

Let us delve into some critical stages in the project lifecycle of an Applied AI solution in detail:

Defining the Scope

Defining the scope of the solution is a pivotal stage in the lifecycle. It helps narrow down the project’s use case. This stage calls for project leaders and technical solution architects to work closely with customers and users to understand the solution outcomes, the pain points to solve, and the key benefits that need to be enabled. This information is essential for training LLMs or creating user workflows for the application.

Below are some key factors to consider during the scoping process:

- Outcome, benefit, or result that the project is expected to deliver

- Functional and non-functional requirements of the project

- Current and future goals

- Phases that the project can be split into to deliver incremental improvements

- Project timelines

- Security requirements

- Performance requirements

It is important to understand that perfecting an AI model is not a one-time implementation of fixed logic, but rather a continuous process. Recognizing this early shapes the solution architecture and task breakdown, and helps allocate resources, time, and costs efficiently.

In addition to effectively defining the scope of the AI application, it is also essential to factor the following stages into your plan.

Selecting the Right Model

One of the simplest ways to choose a suitable AI model is to first narrow down the pain points and use cases and then select the model best suited to solve the problem, rather than force-fitting a particular model.

These models can largely be classified into the following types:

- Service-based models (e.g. OpenAI):

  - These are pre-trained models which do not expose the parameters for any custom training.

  - The models are hosted by the provider, and the costs are determined based on the extent of usage.

- Offline models (e.g. Llama 2):

  - These are base models which provide the full flexibility to customize and train parameters.

  - These models can be hosted internally within an organization.

It is highly recommended that you explore existing models in online marketplaces (like Hugging Face) for use in your AI project.

Another key recommendation for developing an AI-powered solution is to ensure the solution is agnostic to a specific model. Use good architectures, frameworks, and endpoints to ensure the system is not tied to any single model. Replacing a model with a newer, more effective one in the future becomes a simple task if the right levels of abstraction are built in. This holds whether developers choose an existing AI model and build upon it or build and train an AI model from scratch.
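To make the idea of model-agnostic design concrete, here is a minimal Python sketch. The names `TextModel`, `HostedModel`, `LocalModel`, and `answer_query` are hypothetical and chosen only for illustration; the two model classes return placeholder strings instead of calling a real provider or running real inference.

```python
from typing import Protocol


class TextModel(Protocol):
    """Minimal interface the application depends on (hypothetical)."""

    def generate(self, prompt: str) -> str:
        ...


class HostedModel:
    """Stand-in for a service-based model reached over a provider API."""

    def generate(self, prompt: str) -> str:
        # A real adapter would call the provider's SDK here.
        return f"[hosted] {prompt}"


class LocalModel:
    """Stand-in for an offline model hosted inside the organization."""

    def generate(self, prompt: str) -> str:
        # A real adapter would run local inference here.
        return f"[local] {prompt}"


def answer_query(model: TextModel, query: str) -> str:
    """Application logic sees only the TextModel interface."""
    return model.generate(query.strip())


# Swapping models is a one-line change at the call site.
hosted_answer = answer_query(HostedModel(), "  Summarize the report  ")
local_answer = answer_query(LocalModel(), "  Summarize the report  ")
```

Because `answer_query` depends only on the interface, replacing one model with another does not require touching the application logic.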

Once the right model is chosen, the next step is to optimize it based on cost, performance, and other metrics essential for your solution.

Evaluating the Model

Evaluation is another crucial aspect to focus on when developing and improving the AI solution. It helps ascertain the performance of the base model and its ability to solve critical pain points, and determine whether further training or fine-tuning is required.

During this process, it is highly recommended that you invest in setting up pipelines that allow you to continuously evaluate the chosen model and derive specific metrics to determine / improve its capabilities.
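As a rough illustration of such a pipeline, the sketch below scores a model against a small labelled set using exact-match accuracy. Everything here is an assumption made for the example: the metric, the `toy_model` placeholder, the four evaluation cases, and the 0.8 threshold. A real pipeline would use metrics and data suited to the project's scope.

```python
from typing import Callable


def exact_match_accuracy(
    model: Callable[[str], str],
    cases: list[tuple[str, str]],
) -> float:
    """Fraction of cases where the model output equals the expected answer."""
    if not cases:
        raise ValueError("evaluation set is empty")
    hits = sum(
        1
        for prompt, expected in cases
        if model(prompt).strip().lower() == expected.strip().lower()
    )
    return hits / len(cases)


def toy_model(prompt: str) -> str:
    """Placeholder for the model under evaluation; answers from a fixed table."""
    canned = {"capital of France?": "Paris", "2 + 2?": "4"}
    return canned.get(prompt, "unknown")


# Illustrative evaluation set of (prompt, expected answer) pairs.
EVAL_SET = [
    ("capital of France?", "paris"),
    ("2 + 2?", "4"),
    ("largest planet?", "Jupiter"),
    ("capital of Japan?", "Tokyo"),
]

accuracy = exact_match_accuracy(toy_model, EVAL_SET)
needs_improvement = accuracy < 0.8  # threshold is an illustrative assumption
```

Running the same function after every model or prompt change gives a comparable number over time, which is the point of setting up the pipeline early.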

The AI model must be rigorously trained to achieve optimal performance and pass the evaluation standards.

Improving the Model

Some ways to improve the AI model include:

- Prompt engineering deals with prompting your LLM to respond to queries in a particular way and to exhibit specific behaviours. Techniques like zero-shot and one-shot prompting steer the model’s output without changing its parameters.

- Fine-tuning might additionally be required if prompt engineering alone doesn’t achieve the target performance. Fine-tuning is a process where you adjust the model’s parameters using task-specific examples to tune its output. It is not a full-fledged training process, but instead focuses training on one or more specific tasks with limited, targeted data.

- In addition to the above two methods, reinforcement learning from human feedback (RLHF) helps the model respond to user queries and deliver the expected result. However, we recommend this as the last option to explore, when prompt engineering and then fine-tuning do not meet your expectations.
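The difference between zero-shot and one-shot prompting can be sketched in a few lines of Python. The `build_prompt` helper, the `Input:` / `Output:` layout, and the sentiment task are assumptions made for illustration, not a format required by any particular model.

```python
from typing import Optional


def build_prompt(
    instruction: str,
    query: str,
    examples: Optional[list[tuple[str, str]]] = None,
) -> str:
    """Assemble a prompt: zero-shot with no examples, one-shot with one."""
    parts = [instruction]
    for example_input, example_output in examples or []:
        parts.append(f"Input: {example_input}\nOutput: {example_output}")
    # The final block is left open for the model to complete.
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)


INSTRUCTION = "Classify the sentiment as positive or negative."
QUERY = "The update fixed every issue."

# Zero-shot: the model gets only the instruction and the query.
zero_shot = build_prompt(INSTRUCTION, QUERY)

# One-shot: a single worked example shows the expected answer format.
one_shot = build_prompt(
    INSTRUCTION, QUERY, examples=[("The app keeps crashing.", "negative")]
)
```

The resulting strings can be sent to whichever model the project uses; only the prompt changes, not the model.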

Moreover, adaptive testing mechanisms, where real-time behaviour of the system is monitored and fed back into the evaluation pipeline for future performance evaluations, can also be incorporated.
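One simple way to picture this feedback loop is to turn poorly rated production interactions into new evaluation cases. The sketch below assumes a hypothetical log schema (`Interaction`), a 1 to 5 rating scale, and that a human has supplied a corrected answer; none of these come from a specific tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Interaction:
    """One logged exchange from the running system (hypothetical schema)."""

    prompt: str
    response: str
    rating: int  # assumed scale: 1 (poor) to 5 (excellent)
    corrected_answer: Optional[str] = None


def harvest_eval_cases(
    log: list[Interaction], max_rating: int = 2
) -> list[tuple[str, str]]:
    """Turn poorly rated, human-corrected interactions into evaluation cases."""
    return [
        (item.prompt, item.corrected_answer)
        for item in log
        if item.rating <= max_rating and item.corrected_answer is not None
    ]


production_log = [
    Interaction("Reset my password", "Click 'Forgot password'.", rating=5),
    Interaction("Export to CSV?", "Not supported.", rating=1,
                corrected_answer="Use File > Export > CSV."),
    Interaction("Change language?", "I don't know.", rating=2),
]

# Only the low-rated interaction that has a correction becomes a new case.
new_cases = harvest_eval_cases(production_log)
```

The harvested cases can then be appended to the evaluation set so that future evaluations cover the failures observed in real use.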

This stage can be highly iterative and time-consuming, depending on the evaluation parameters and the model’s performance. In general, the more iterations the model goes through in this stage, the better it tends to perform against the evaluation criteria.

Deploying the Model

Upon completing the evaluation of the model, the next step in the lifecycle is deploying it. Embracing continuous deployment practices with the help of MLOps ensures that improvements can be seamlessly integrated into the system.

Moreover, user feedback gathered after deployment drives continuous improvement and helps scale the model to more use cases.

Application Development and Integration

The last step involves integrating the model into an existing application or developing a new application, depending on the user requirements. Applications can vary widely, from simple chatbots for interactive user conversations to assistive systems within traditional GUIs. The workflows and user experiences need to be tailored to leverage the AI model effectively.

Overcoming Challenges When Deploying the AI Model

It is essential to comprehend that stages in the project life cycle are not pre-defined, and a one-size-fits-all approach is not possible. It is instead a continuous learning journey, and with the execution of more projects, project teams can optimize the stages in the cycle, fine-tune their approach, and bring in more automation to adapt to evolving needs.

Understanding and leveraging the life cycle and the various stages of applied AI from defining the scope to application integration will provide an edge when developing an AI solution.

When venturing into the ever-evolving world of AI, the initial stages of developing a project might be challenging. Issues around data security, data scarcity, infrastructure, integrations, and computation can pose hurdles, but the end outcome can be highly rewarding.

This is where Soliton can help.

Leveraging our expertise and proficiency in developing software applications powered by AI, we can help you harness the full potential of Artificial Intelligence and guide you through the process of developing a tailored AI solution to effectively address your unique challenges.

Stay tuned for future blogs where we explore real-world use cases and delve deeper into the fascinating world of AI-powered application development.